Before anyone publishes anything in a reputable science journal, their article has to pass ‘peer review’. Peer review is a process in which external experts, i.e. peers, evaluate the work of scientists. This function makes it crucial for checking the validity of science. Even though this seems both necessary and commendable, the method has attracted plenty of criticism.

How does peer review work?

Peer review is a form of self-regulation by qualified members of a profession within the relevant field and normally involves multiple steps:

- The authors send their manuscript to a journal of their choice and ask the editor to consider it for publication.

- The journal editor takes a good look at it to decide whether to reject it straight away (for instance, because the subject area is not of interest to the journal) or whether to send it out to referees for examination.

- The referees (usually 2 or 3) then have the opportunity to refuse or accept the invitation to review the submission.

- If they accept, they review the paper and send their reports to the editor (usually according to a deadline set by the editor).

- Based on these reports, the editor now tries to come to a decision about publication; if the referees are not in agreement, a further referee might have to be recruited.

- Even if the submission is potentially publishable, the referees will usually have raised several points that need addressing. In such cases, the editor sends the submission back to the original authors asking them to revise their manuscript.

- The authors would then do their revision and re-submit their paper.

- Now the editor can decide to either publish it or send it back to the referees asking them whether, in their view, their criticisms have been adequately addressed.

- Depending on the referees’ verdicts, the editor makes the final decision and informs all the involved parties accordingly.

- If the paper was accepted, it now goes into production.

- When this process is finished, the authors receive the proofs for a final check.

- Eventually, the paper is published and the readers of the journal finally have a chance to scrutinise it.

- This frequently prompts comments which the editor might decide to publish in the journal.

- In this case, the authors of the original paper would normally get invited to write a reply.

- Finally, the comments and the reply are published in the journal side by side.

The whole process takes time, sometimes lots of time. I have had papers that took around two years from submission to publication. This delay seems tedious and, if the paper is important, unacceptable (if the article is not important, it should arguably not be published at all). Equally unacceptable is the fact that referees are expected to do their reviewing for free. The consequence is that many referees do not review as thoroughly as might be expected.

The reviewer’s perspective

At Exeter, I had more than enough opportunity to see the problems of peer review from the reviewer’s perspective. At one time, I used to accept about 5 reviews per week, roughly 60% for the many (often somewhat dubious) journals of alternative medicine and the rest for mainstream publications; in total, I have surely reviewed well over 1000 papers.

I often recommended inviting a statistician to do a specialist review of the stats. Only rarely were such suggestions accepted by the journal editors. Often I recommended rejecting a submission because it was simply of too poor quality, and occasionally, I told the editor that I had a strong suspicion of the paper being fraudulent. The editors very often (I estimate in about 50% of cases) ignored my suggestions and published the papers nonetheless. If the editor did follow my advice to reject a paper, I regularly saw it published elsewhere later (usually in a less well-respected journal).

With ‘open’ peer review (where the authors are told who reviewed their submission), infuriated authors sometimes contacted me directly (which they are not supposed to do) after seeing my criticism of their paper. Occasionally this resulted in unpleasantness, once or twice even in threats (from them, I hasten to add). Eventually I realised that improving the standards of science in the realm of alternative medicine was a Sisyphean task, became quite disenchanted with it and agreed to do fewer and fewer reviews. Today, I do only very few – maybe one or two per month.

The author’s perspective

I also had, of course, the opportunity to experience the peer review process from the author’s perspective. Most scientists will have suffered from unfair or incompetent reviews and many will have experienced the frustrations of the seemingly endless delays. Once (before my time in alternative medicine) a reviewer rejected my paper and soon after published results that were uncannily similar to mine. In alternative medicine, researchers tend to be very emotionally attached to their subject. I once received reviewer’s comments which started with the memorable sentence: “This article is staggering in its incompetence…”

The editor’s perspective

As the founding editor of three medical journals, I have had 40 years of experiencing peer review as an editor. This often seemed like trying to sail between the devil and the deep blue sea. Editors naturally want to fill their journals with the best science they can find. But, all too often, they receive the worst science they can imagine. They are constantly torn by tensions pulling them in opposite directions. And they somehow have to cope not just with poor quality submissions but also with reviewers who miss deadlines and do their work shoddily.

The investigator’s perspective

Finally, I also had the pleasure of doing some research on the topic of peer review. Sometime in the mid-1990s, when such research was still highly unusual, we were invited to the offices of the BMJ where the then BMJ editor, Richard Smith, discussed with us the idea of doing some research on aspects of the peer review process. Together with the late John Garrow, we designed a randomised trial and conducted a proper study. Once we had written up the results, an ironic thing occurred: our paper did not pass the peer review process of the BMJ. So, eventually we published it elsewhere. Here is its abstract:

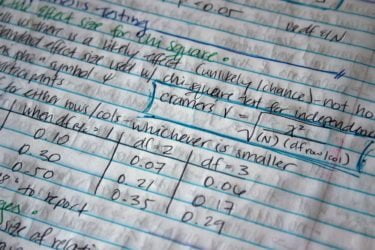

A study was designed to test the hypothesis that experts who review papers for publication are prejudiced against an unconventional form of therapy. Two versions were produced (A and B) of a ‘short report’ that related to treatments of obesity, identical except for the nature of the intervention. Version A related to an orthodox treatment, version B to an unconventional treatment. 398 reviewers were randomized to receive one or the other version for peer review. The primary outcomes were the reviewers’ rating of ‘importance’ on a scale of 1-5 and their verdict regarding rejection or acceptance of the paper. Reviewers were unaware that they were taking part in a study. The overall response rate was 41.7%, and 141 assessment forms were suitable for statistical evaluation. After dichotomization of the rating scale, a significant difference in favour of the orthodox version with an odds ratio of 3.01 (95% confidence interval, 1.03 to 8.25), was found. This observation mirrored that of the visual analogue scale for which the respective medians and interquartile ranges were 67% (51% to 78.5%) for version A and 57% (29.7% to 72.6%) for version B. Reviewers showed a wide range of responses to both versions of the paper, with a significant bias in favour of the orthodox version. Authors of technically good unconventional papers may therefore be at a disadvantage in the peer review process. Yet the effect is probably too small to preclude publication of their work in peer-reviewed orthodox journals.

How can we improve peer review?

Unfortunately, there are few easy solutions to the many problems of peer review. It often seemed to me that peer review is the worst idea for checking the science that is about to be published… except for all the other ideas. If peer review is to survive – and I think it probably will – there are a few things that could, from my point of view, be done to improve it.

We should make it much more attractive to the referees. Payment would be the obvious way to achieve this; the big journals like the BMJ, LANCET, NEJM, etc. could easily afford that. But recognising reviewing academically would, in my opinion, be even more important. At present, academic careers depend strongly on publications; if they also depended on reviewing, experts would queue up to act as referees.

The reports of the referees could be independently evaluated according to sensible criteria. These data could then be collated and published as a marker of academic standing. Referees who fail to do a reliable job would thus spoil their chances of being re-invited for this task and, in turn, of getting the academic promotions they are aiming at.

We need to speed up the entire review process. Waiting for months on end is hugely counter-productive for all concerned. Fortunately, most journals are already doing what they can to make peer review acceptably swift.

Today many journals ask authors for the details of potential reviewers of their submission. Subsequently, they send the paper in question to these individuals for peer review. I find this quite ridiculous! No author I know of has ever resisted the temptation to name people for this purpose who are friends or owe a favour. Journals should afford the extra work to identify reviewers who are the best independent experts on any particular subject.

Of course, none of my suggestions are simple or fool-proof or even sure to work at all. But surely it is worth trying to get peer review right. The quality of future science seems to depend on it.