Placebo effects are typically discussed in the context of drug trials, where sham pills and potions are claimed to induce healing through the power of belief.

I remain unconvinced that there is any real, meaningful, clinical effect that can be described as a ‘placebo effect’. The improvements observed in the placebo groups of clinical trials can be sufficiently explained by several known factors, including statistical effects, psychological influences, normal immune responses, external factors (like taking an unrelated medication at the same time), and even just straightforward mistakes.

In 2018, the BBC’s Horizon series aired a documentary titled The Placebo Experiment: Can My Brain Cure My Body? Presented by the late Michael Mosley, the programme made several claims of seemingly miraculous improvements attributed to the placebo effect. For this article, we are concerned with just one of these claims: the effects of placebo surgery.

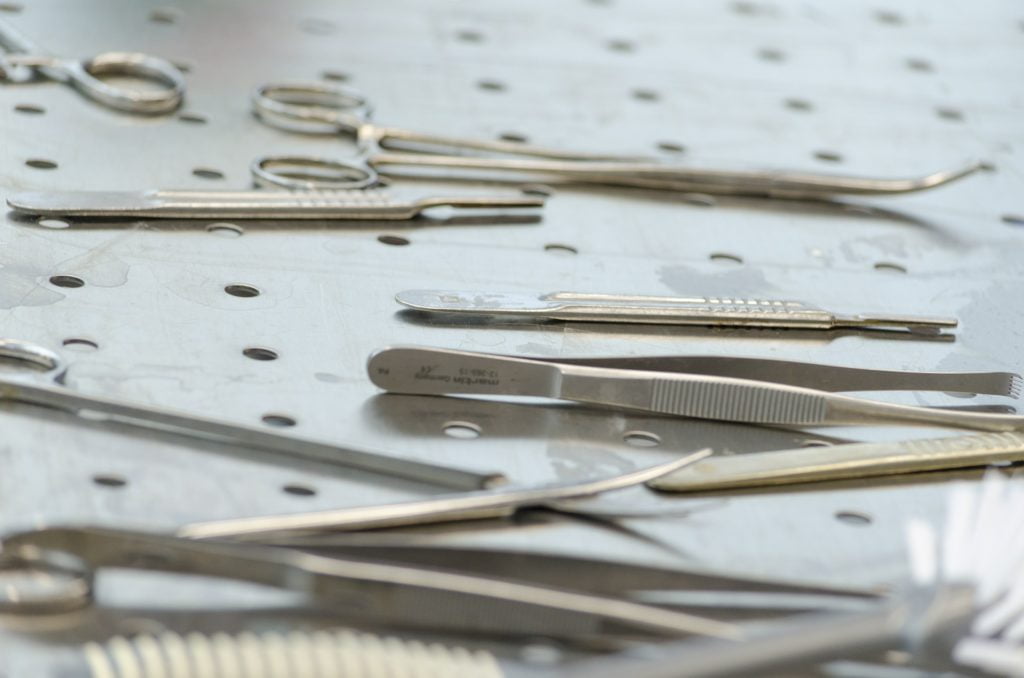

In a drug trial, we would typically take a group of patients, randomly assign each patient to get either the drug or a placebo, and then examine the differences in health outcomes between the two groups. In a placebo-controlled surgical trial, the process is much the same. Patients suffering with some condition (for example, osteoarthritis) are recruited and randomly assigned to get either surgery or sham surgery.

Patients in the surgery group are given the real surgical procedure, as per usual practice. Patients in the sham group are prepped as normal, taken to theatre as normal, and anaesthetised or sedated as normal. Incisions are still made and, for an arthroscopic (keyhole) procedure, the scope is even inserted as normal. After a simulated surgery, patients are sewn up and sent to recover, without any meaningful surgical intervention having taken place.

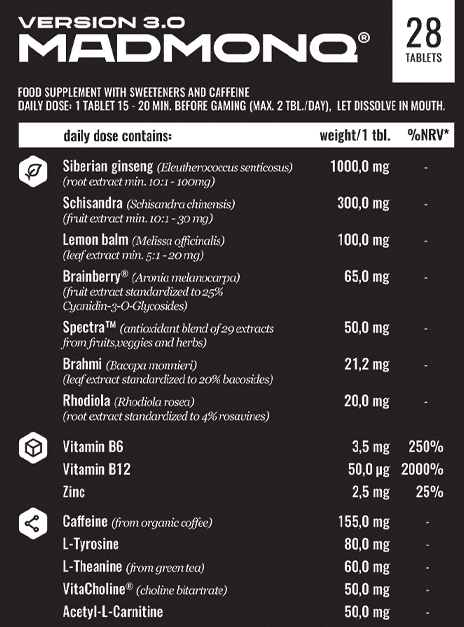

For Horizon, Michael Mosley spoke with a surgeon named Andrew Carr, attached to Oxford University, who took part in such a study. The operation, known as an acromioplasty, involved the removal of soft tissue and bone spurs from the shoulder, in the expectation that it would relieve pain. In the sham condition, incisions were made and a scope was inserted, but no material was removed.

This study was published by Beard et al in The Lancet in 2018, and ultimately involved over 300 patients and 51 surgeons. It concluded that there was no significant difference in pain relief or functional improvement between the surgery and sham groups.

Several years earlier, the New England Journal of Medicine had published a similar study. Moseley et al (no relation) took 180 patients suffering with osteoarthritis of the knee and randomised them to get either arthroscopic débridement (the removal of damaged tissue), arthroscopic lavage (flushing with water), or sham surgery.

Moseley reported: “at no point did either of the intervention groups report less pain or better function than the placebo group […] the outcomes after arthroscopic lavage or arthroscopic débridement were no better than those after a placebo procedure.”

The straightforward interpretation of these studies is that, since surgery failed to outperform sham in both cases, the procedures are ineffective. Arthroscopic débridement and lavage do not treat osteoarthritis of the knee. Acromioplasty does not alleviate shoulder pain. These operations should therefore be discontinued as they do not provide a meaningful therapeutic benefit.

Instead, due to the peculiar influence the placebo topic has on scientific rigour, there came calls for sham surgeries to replace real ones. Rather than viewing these studies as evidence that the surgeries themselves are ineffective, some interpret the findings as proof of the placebo’s effectiveness.

The Canadian science communicator Jonathan Jarry coined a term for this convoluted interpretation: the ShamWow Fallacy. This is when experts, invested in the power of the placebo, interpret a treatment's failure to outperform placebo as evidence that the placebo itself works.

A systematic review in the British Medical Journal in 2014 found that, in about half of the studies, surgery failed to outperform placebo. Interestingly, it also found that in 74% of trials there was a beneficial therapeutic effect within the placebo group. On the face of it, this could present a problem. ShamWow Fallacy aside, if it is the case (as I contend) that the placebo effect is an illusion, how do we account for the improvements observed in the placebo groups of surgical trials?

Beard illustrates this nicely. In contrast to many studies, Beard included three groups: surgery, sham surgery, and no treatment. Although surgery performed no better than sham, both surgical groups performed better than no treatment. So, does that prove that placebo surgery really does work?

Sadly, no. For one thing, any no-treatment control is necessarily unblinded. Patients who do not get surgery are aware they are getting no surgery, and this is likely to influence any patient-reported outcomes. Patients are less likely to report an improvement when they are aware they have undergone no intervention; this is why medical studies use blinding in the first place.

Beard also notes that while the difference between surgery and no treatment was statistically significant, it was not clinically important. That is to say, although there is an improvement on paper, it does not translate to any meaningful change in the quality of life of the patient.

Perhaps most important is the fact that, in both Beard’s and Moseley’s studies, patients receiving surgery were also given post-operative physiotherapy to help support their recovery. Since physiotherapy is also a treatment for both shoulder pain and osteoarthritis, this likely contributed to the observed improvements. Patients were, in fact, being given a second treatment after the first, a parallel intervention. This is exactly the sort of external factor which can cause an illusion of a therapeutic placebo effect, if we are not careful in how we interpret our data.

As for Andrew Carr, according to Horizon he discontinued acromioplasty operations after the study he was part of demonstrated they were ineffective. His current recommendation? Physiotherapy.